Raspberry Pi Compute Cluster Build

Objective

The goal of this project was to set up a bare-metal compute cluster on my home network using Raspberry Pis, providing (relatively) cheap local compute for deploying applications as part of my regular development cycle. The main motivation came from other software projects: I often wanted to deploy them to a production-like environment and run them persistently. Cloud compute (EC2) can get expensive, especially for experimentation, so a local cluster, even a somewhat underpowered one, is useful for keeping applications running.

This project wasn't undertaken with any specific application in mind, but I found a few interesting applications along the way that I wanted to incorporate. In particular, I wanted to set up Tailscale so I could remotely access the cluster when working outside the house, and deploy Pi-hole to limit advertisements and tracking on my local network. For application deployment, I wanted a k3s cluster. Eventually I want to incorporate object storage (MinIO) and monitoring solutions as well.

Hardware Details

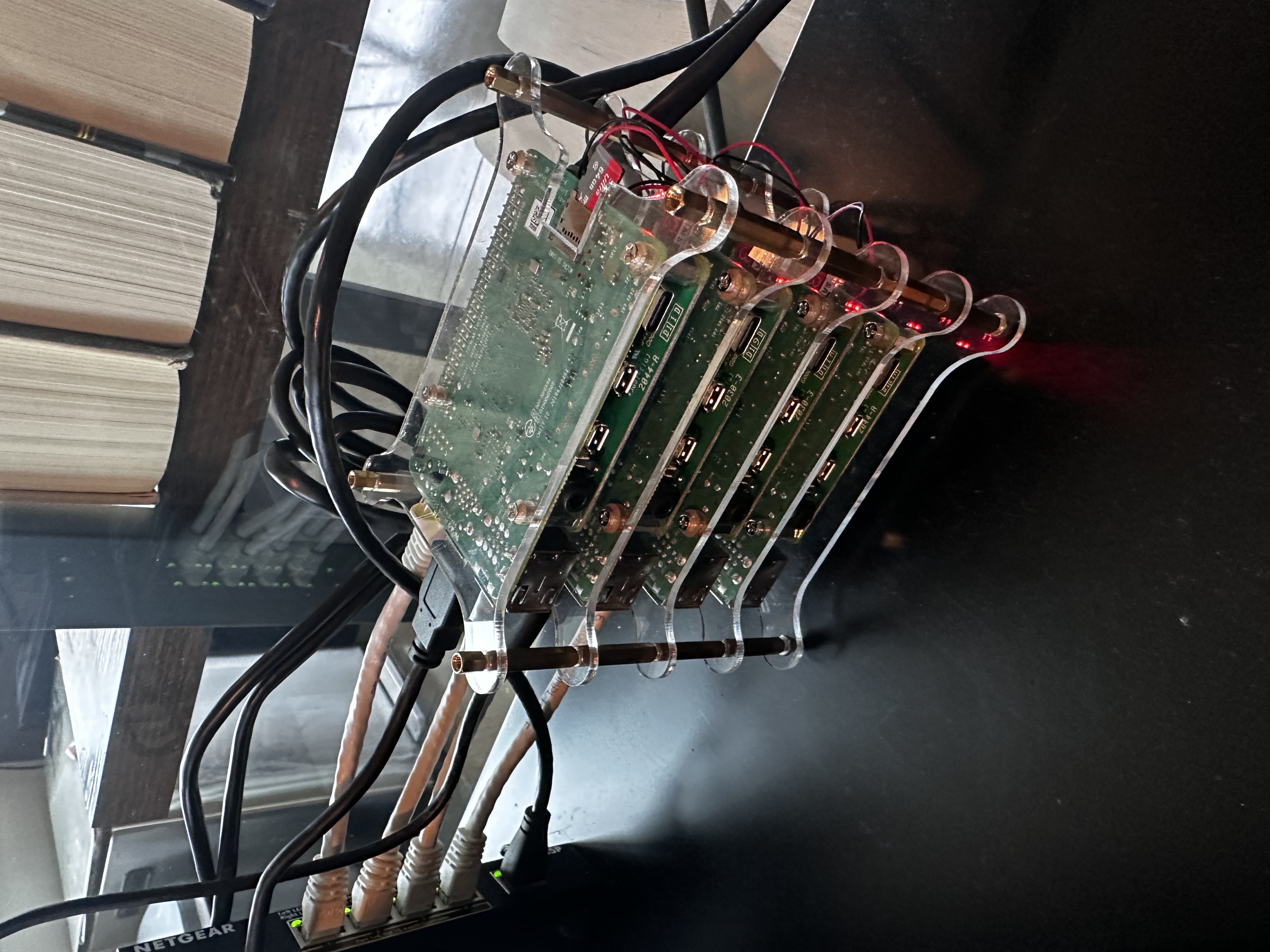

For the hardware I wanted to keep things as simple as possible. This project also had a few false starts before the write-up, so it uses some older parts. The main components are four Raspberry Pi 4s, Pi Power-over-Ethernet HATs, a case, and an Ethernet switch. I wanted cable management to be as simple as possible, so I chose Power over Ethernet to power the cluster.

For storage, I initially used four microSD cards, but after starting the setup I read that the frequent disk writes Kubernetes relies on wear out microSD cards quickly, so an external hard drive or SSD is ultimately preferable. I didn't want to spend more money on this project than I had to until I had proved out its usefulness, so I pulled two old, unused USB hard drives to provide storage for two of the Pis. I'm running the other two off SD cards, in part as an experiment to see how long they take to fail. These drives are older, but any similar USB drive should work in their place.

All put together, this is what the cluster looks like

With all this put together and on-hand we're now ready to start the software build!

The parts list for this build

4x Raspberry Pi 4s (Though now I would go with the newer Raspberry Pi 5)

1x Cluster Case

4x SD Card

OS Setup

To get started with the software build, we need an operating system installed on each of the Pis, along with SSH keys loaded onto them so that they can be accessed remotely from their first boot.

Ultimately we want to prevent outages and data loss by reducing the microSD card wear caused by running Kubernetes. There are a few ways to do this, such as using RAM disks for logging, or making the microSD card read-only and using a secondary drive for storage and logging, but all of these significantly complicate the deployment and use of the cluster. Instead, we'll go with a simpler solution: install the operating system directly on the USB hard drives and boot the Pi from the drive. There is a problem, however: the default firmware doesn't attempt to boot from attached USB drives. We therefore need to update the firmware on all of our Pis so that they attempt to boot from USB drives first. The updated firmware falls back to booting from the SD card if no USB drive is found, so there is no harm in updating every Pi, and we can simply add USB drives later as we want. We only need a single SD card flashed with the bootloader updater.

Bootloader Update

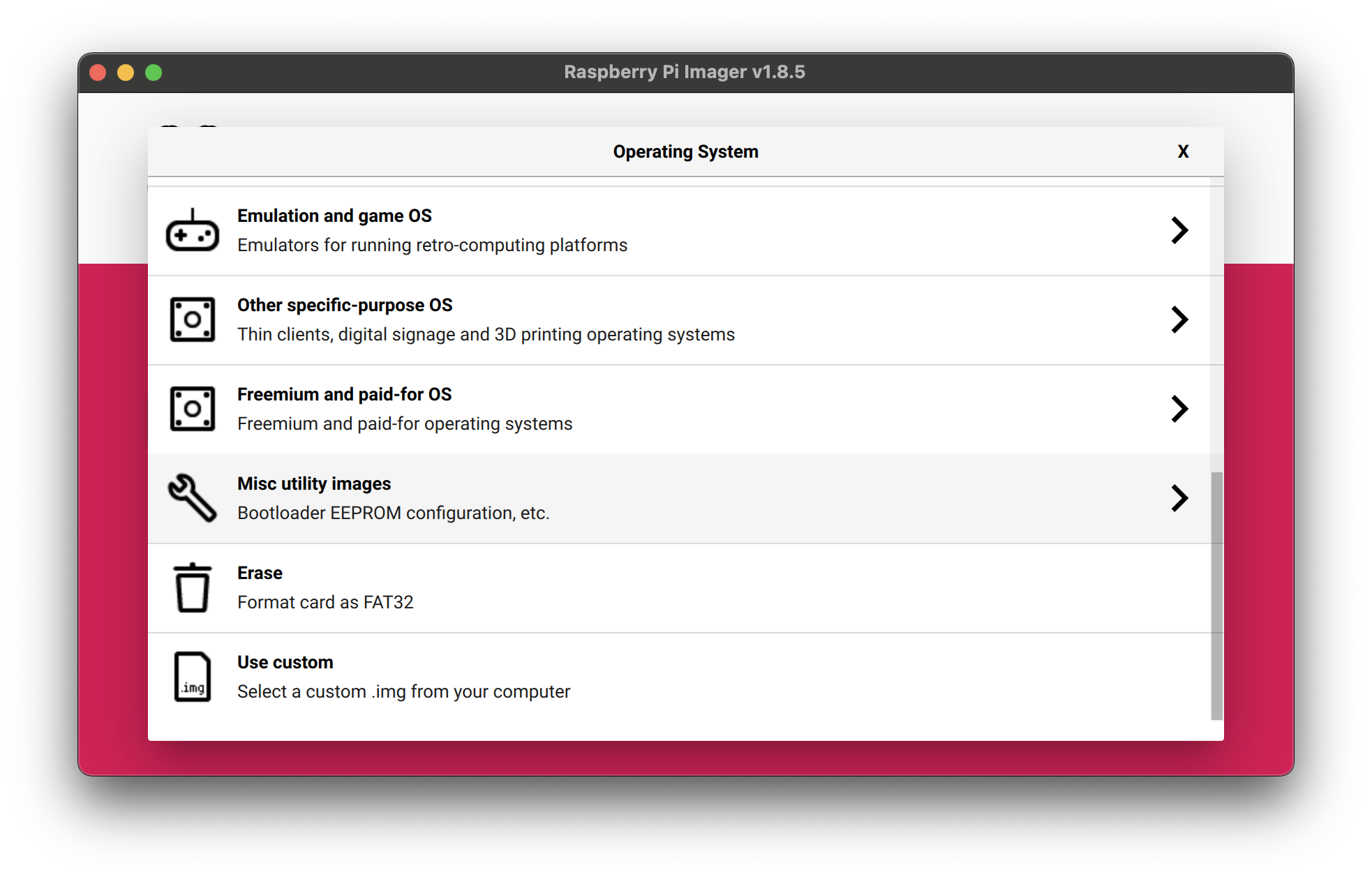

To start, we first install the Raspberry Pi Imager

Next insert the micro SD card into the computer

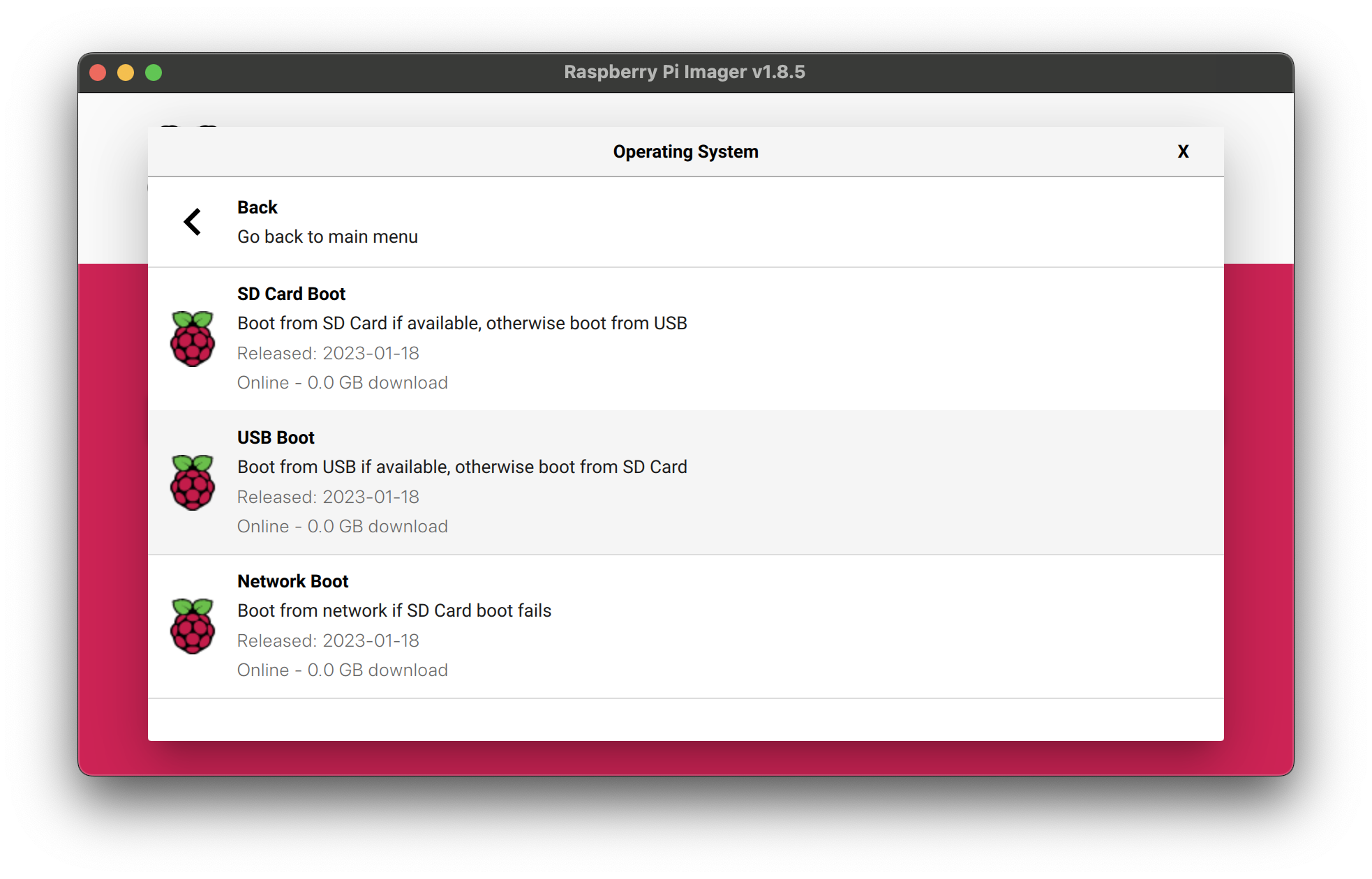

Under "Operating System" select "Misc utility images"

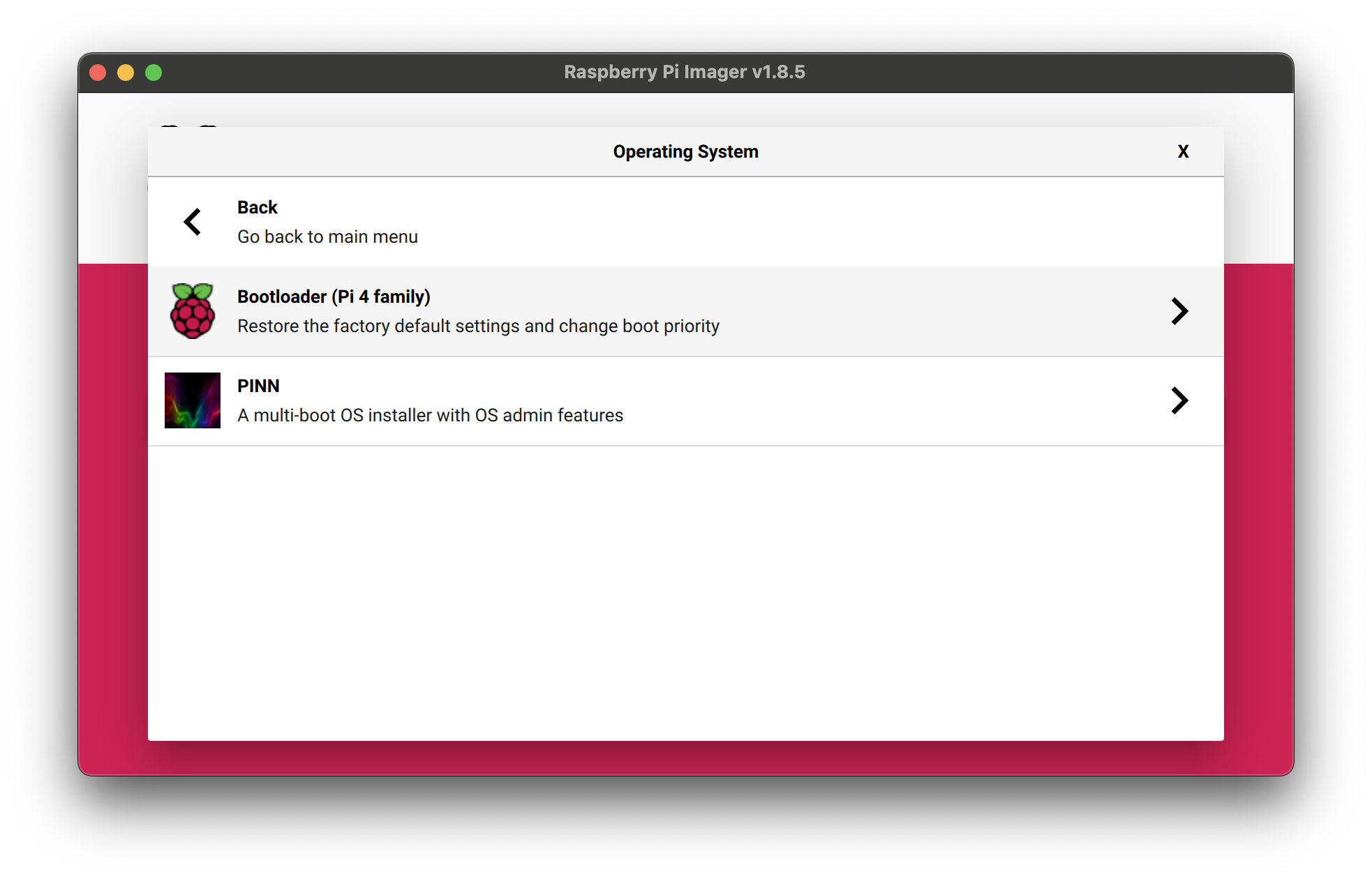

Select Raspberry Pi

Select USB Boot

Select the micro SD card as the storage device and click "Next" to start the flashing process.

To complete the update, insert the SD card into the Pi and power it on. The green activity light will blink in a steady, regular pattern once the update has completed, but wait at least ten seconds to ensure flashing completed successfully. Then power off the Pi and remove the SD card.

Complete the flashing process using the same card on all pis in the cluster.
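If you want to confirm that the update took effect, the bootloader configuration can be read back once a Pi is running Linux: assuming the rpi-eeprom tools are installed, rpi-eeprom-config prints the current settings, including a BOOT_ORDER value. As I understand the Raspberry Pi bootloader documentation, that value is a sequence of hex digits read from right to left, so a USB-first configuration should look like 0xf14. The helper below is only a sketch (describe_boot_order is a made-up name, and only a handful of digit codes are mapped) that spells the sequence out.

```shell
# Sketch: describe the boot sequence encoded in a BOOT_ORDER value.
# describe_boot_order is a hypothetical helper, not part of rpi-eeprom.
# The bootloader reads the hex digits from right to left.
describe_boot_order() {
    digits="${1#0x}"                       # drop the 0x prefix if present
    out=""
    while [ -n "$digits" ]; do
        last="${digits#"${digits%?}"}"     # final (right-most) digit
        digits="${digits%?}"               # remaining digits
        case "$last" in
            1) name="SD card" ;;
            2) name="network" ;;
            4) name="USB mass storage" ;;
            6) name="NVMe" ;;
            f|F) name="restart" ;;
            *) name="other($last)" ;;      # codes not mapped in this sketch
        esac
        out="${out:+$out -> }$name"
    done
    echo "$out"
}
```

For example, describe_boot_order 0xf14 prints "USB mass storage -> SD card -> restart", which matches the USB-first, SD-fallback behavior described above.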

SSH Key Generation

Before we start flashing the SD cards and USB drives with the desired Linux image, we want to create an SSH key so that we can remotely access the devices from their first boot.

Create an SSH key using the command

ssh-keygen -t ed25519 -C "rpi_cluster"

Follow the prompts as directed. Do not add a passphrase (unless you really want the added security).

Save the key to

/Users/YOUR_USERNAME/.ssh/rpi

This SSH key will be used across all Raspberry Pis in the cluster to make them easy to access. Should you want more security isolation, a different key can be generated for each Pi. However, since this is just an experimental cluster, a single key will suffice for my purposes.
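The same key can also be created without answering any prompts, which is handy if you ever rebuild the cluster. This is a minimal sketch: generate_cluster_key is a hypothetical helper name, and the default path simply mirrors the location used in this article.

```shell
# Sketch: create the cluster key non-interactively.
# generate_cluster_key is a hypothetical helper, not part of OpenSSH.
generate_cluster_key() {
    key_path="${1:-$HOME/.ssh/rpi}"    # private key; the public key lands at "$key_path.pub"
    if [ -e "$key_path" ]; then
        echo "Key already exists at $key_path, leaving it untouched" >&2
        return 0
    fi
    mkdir -p "$(dirname "$key_path")"
    # -f sets the output file, -N "" sets an empty passphrase, -q keeps ssh-keygen quiet
    ssh-keygen -q -t ed25519 -C "rpi_cluster" -f "$key_path" -N ""
}
```

Calling generate_cluster_key with no arguments writes ~/.ssh/rpi and ~/.ssh/rpi.pub. Refusing to overwrite an existing key is a deliberate choice here, since replacing the key would lock you out of Pis that were already flashed with the old one.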

OS Image Flashing

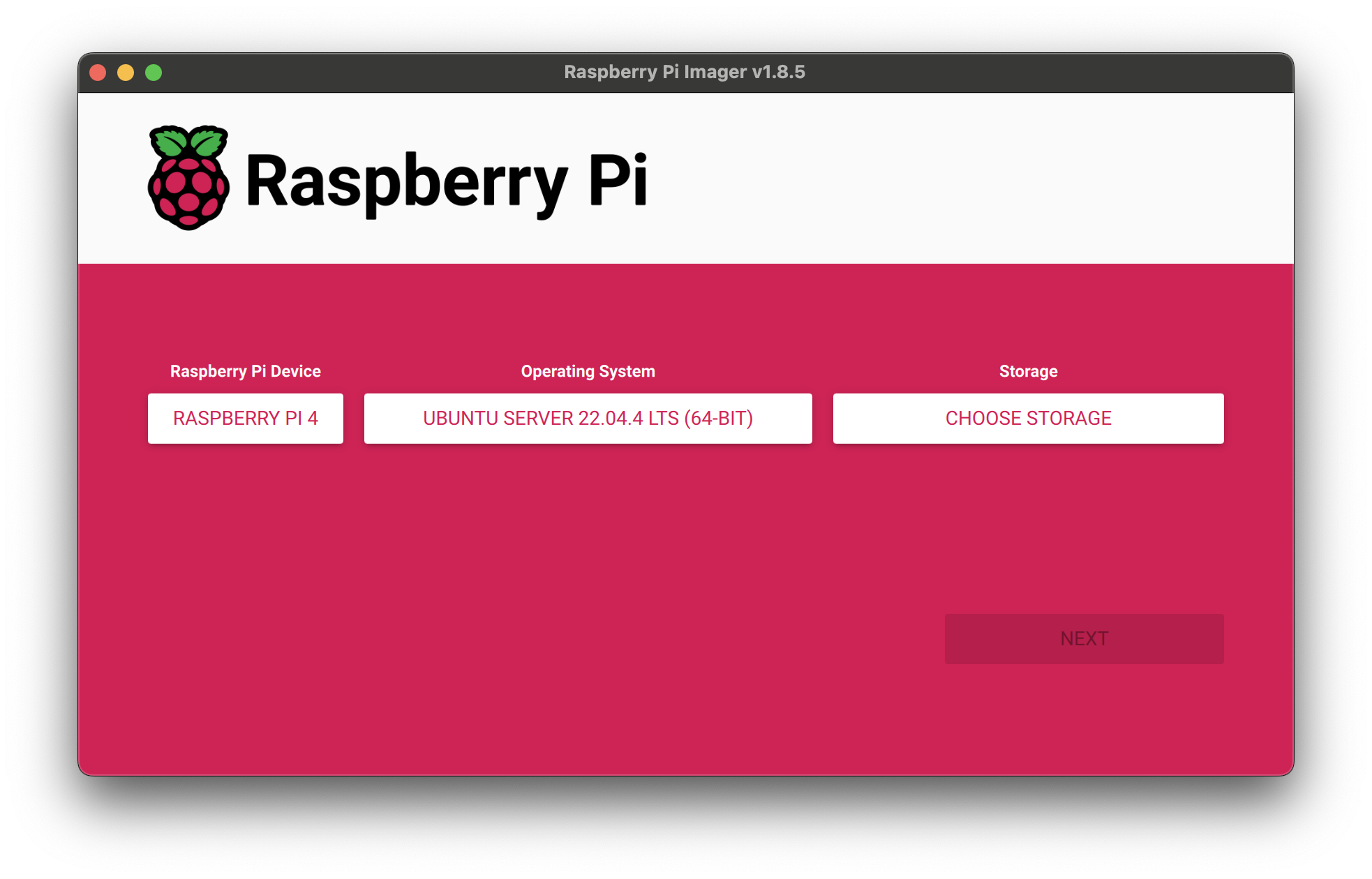

At this point we're ready to flash the primary storage media (USB drive or SD card) for each Pi with the desired Linux image. I'll be using Ubuntu Server 22.04 due to its wide adoption for similar projects. This process is repeated for each USB drive or SD card used to provide the storage for a Pi.

Open the same Raspberry Pi Imager used to install the bootloader

Select the operating system you want to install. For my cluster I used Ubuntu 22.04 LTS Server edition. The server edition is lighter weight, omitting many of the packages included in the default desktop version, which is what we want since we plan to install most of what we need ourselves.

Select the drive you want to install on and hit "Next". Click "Customize Settings". Here we can set a few helpful things so that the base image comes with the username, password, and SSH keys already set up, letting us log in and start using the server immediately after boot.

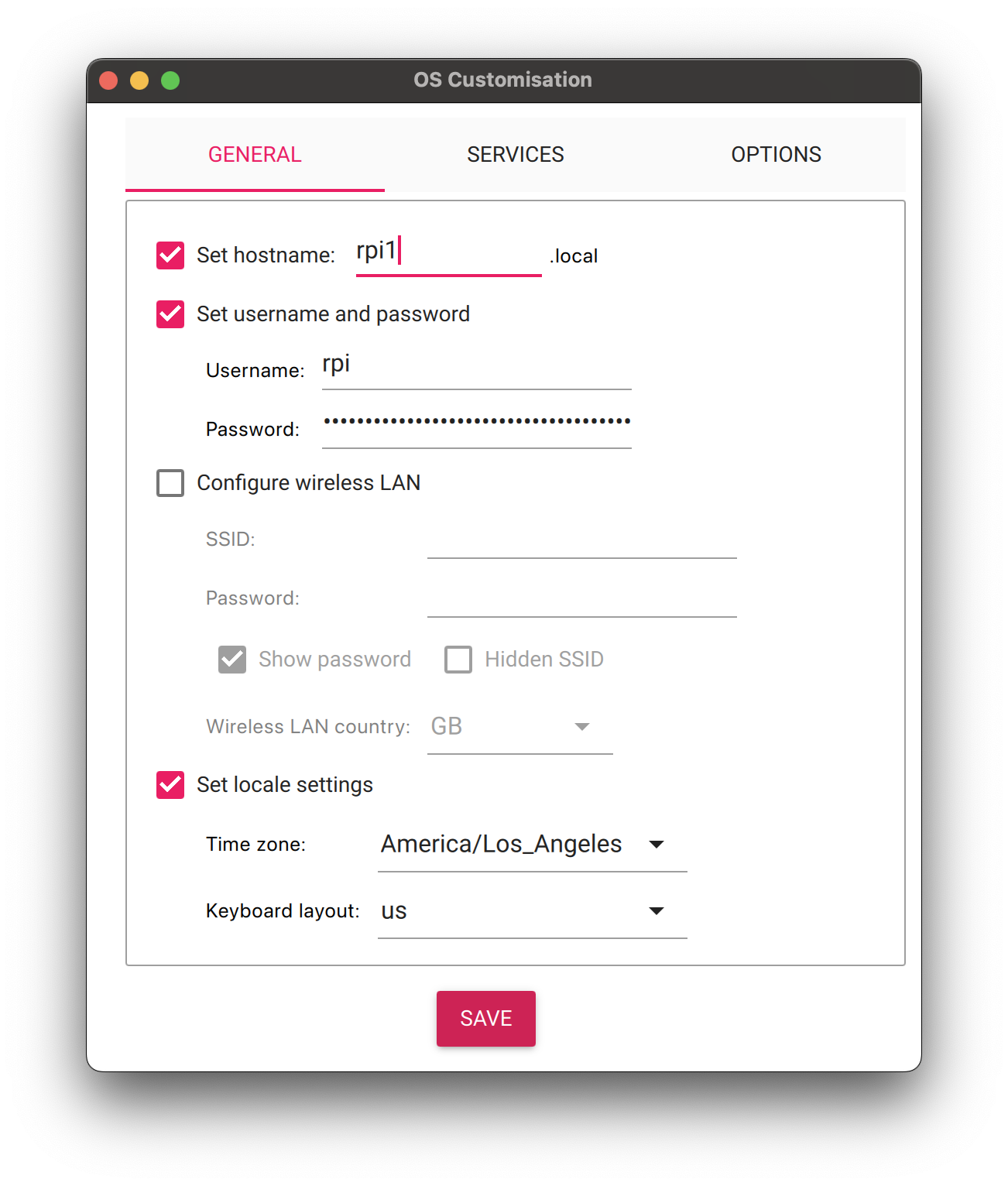

Under "General" we set the following customization settings

Set the hostname to your hostname of choice. I used rpi1, rpi2, rpi3, and rpi4

Set the username and password. I used rpi on all devices to standardize the login

Set the time zone to your current time zone

Next select OS Customizations - Services

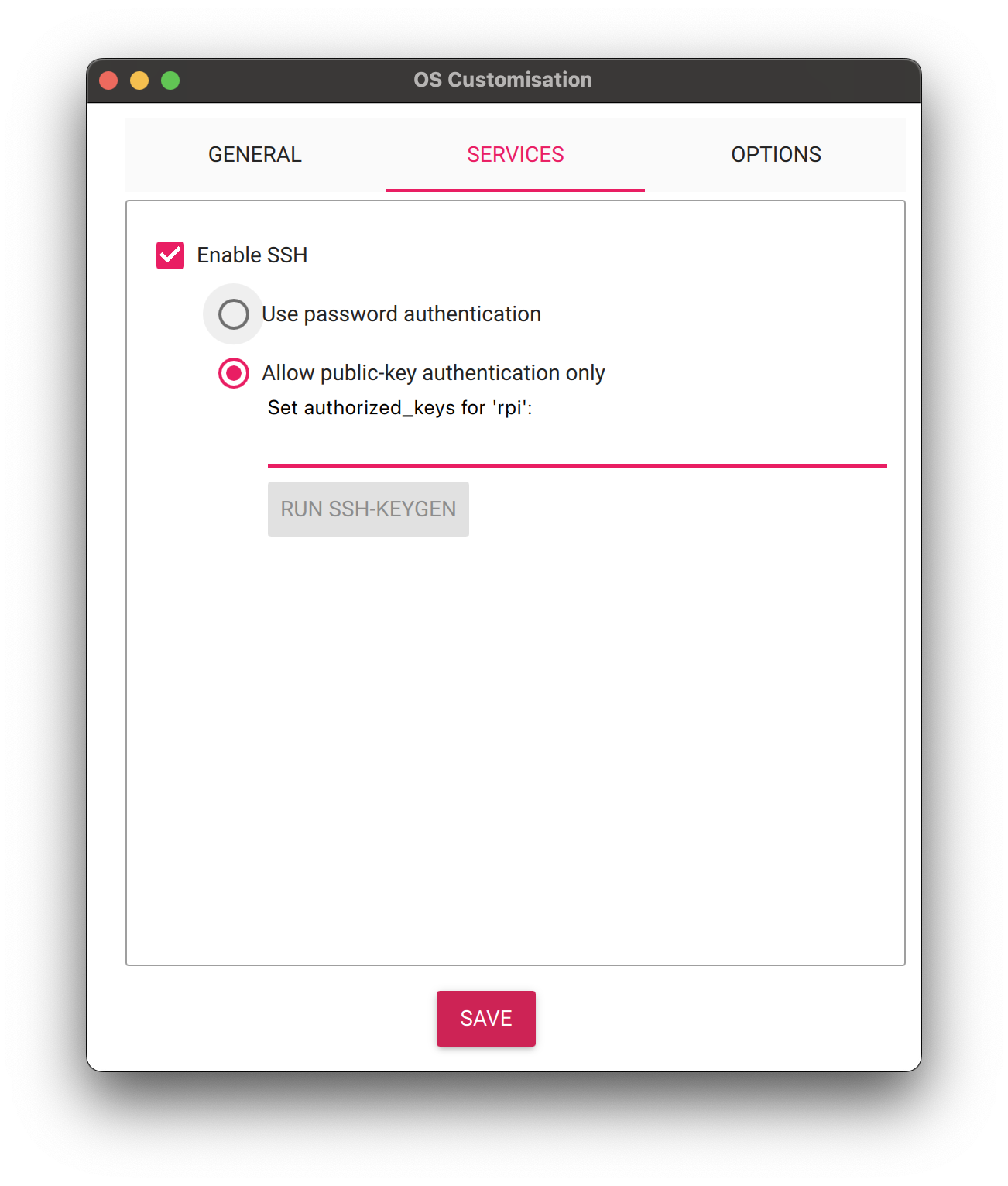

Enable SSH

Get the SSH public key (the file ending in .pub) generated previously. On macOS you can copy it to the clipboard with the command

cat ~/.ssh/rpi.pub | pbcopy

Paste the public key in the section below

Click Next to start writing.

We're now good to log into the device! Plug all the storage media in and power on your Pis.

Wait a little while, then check your router for the devices to show up on your home network and find their IP addresses. Then you can ssh into them using

# Change the IP address to match what your Pis came up on

ssh -i ~/.ssh/rpi rpi@192.168.1.101

On the first login you will run into a message like

The authenticity of host '192.168.1.101 (192.168.1.101)' can't be established.

ED25519 key fingerprint is SHA256:XXXXXXXXXXXXX/XXXXXXXXXXXXXXXXXX.

This key is not known by any other names.

Are you sure you want to continue connecting (yes/no/[fingerprint])?

Type yes to continue and now you're in! You should see the wonderful login screen of success

Welcome to Ubuntu 22.04.4 LTS (GNU/Linux 5.15.0-1053-raspi aarch64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/pro

System information as of Tue Apr 23 14:44:06 PDT 2024

System load: 0.1904296875

Usage of /: 0.5% of 687.55GB

Memory usage: 3%

Swap usage: 0%

Temperature: 50.6 C

Processes: 151

Users logged in: 0

IPv4 address for eth0: 192.168.1.101

IPv6 address for eth0: fdaf:56fe:125e:abca:dea6:32ff:fed6:4539

IPv4 address for tailscale0: 100.76.243.9

IPv6 address for tailscale0: fd7a:115c:a1e0::b701:f309

* Strictly confined Kubernetes makes edge and IoT secure. Learn how MicroK8s

just raised the bar for easy, resilient and secure K8s cluster deployment.

https://ubuntu.com/engage/secure-kubernetes-at-the-edge

Expanded Security Maintenance for Applications is not enabled.

0 updates can be applied immediately.

Enable ESM Apps to receive additional future security updates.

See https://ubuntu.com/esm or run: sudo pro status

Last login: Tue Apr 23 14:41:10 2024 from 192.168.1.3

rpi@rpi1:~$

Quality-of-Life Improvements

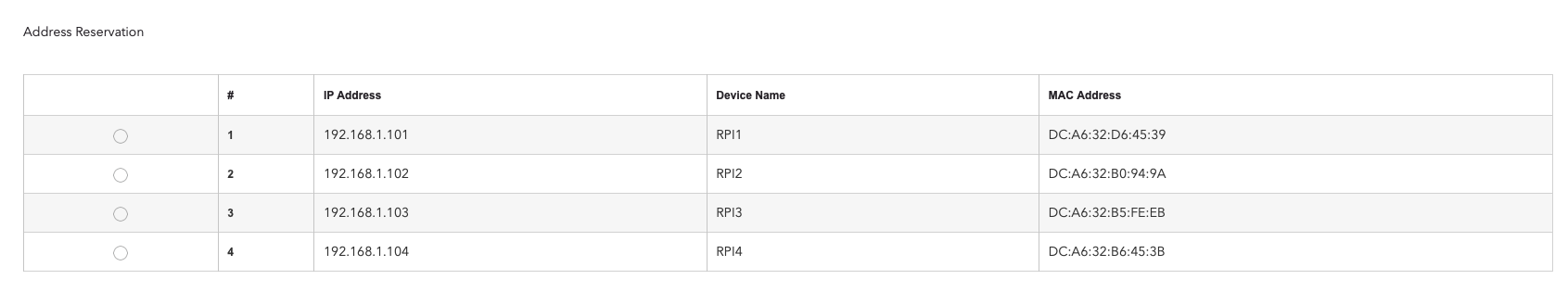

Before we go further in setting up the cluster, it helps to make a few improvements that make accessing the Pis easier. In particular, we're going to assign all the Pis static IP addresses so that where you access them is predictable. This in turn enables setting up an SSH config file and, later, the Ansible hosts file. It is also a prerequisite for deploying Pi-hole to the devices later.

Setting a Static IP Address

The first step is to set static IP addresses on your router for each of the Pis. How this is done differs between routers; I did it on my Netgear Nighthawk RAX70.

You should see something similar to the below on your own router when you have done this

After the static addresses are set, ssh into each Pi and restart it with

sudo reboot

so that all Pis come up with their new static IP addresses.

Creating an SSH Config File

The next quality-of-life improvement is to create an SSH config file that lets us quickly get into each Pi without needing to explicitly pass in the key or type in the IP address. This is configured through an SSH configuration file at ~/.ssh/config.

If you don't have an SSH config (which you can confirm by inspecting the output of ls ~/.ssh), you can simply create one with

touch ~/.ssh/config

Now add (using your preferred editor) the following lines to your config file

#... other SSH Configs

Host rpi1

IdentityFile ~/.ssh/rpi

# NOTE: Replace with your static IP address

HostName 192.168.1.101

User rpi

Host rpi2

IdentityFile ~/.ssh/rpi

HostName 192.168.1.102

User rpi

Host rpi3

IdentityFile ~/.ssh/rpi

HostName 192.168.1.103

User rpi

Host rpi4

IdentityFile ~/.ssh/rpi

HostName 192.168.1.104

User rpi

Now we can log in to our Pis with commands like

ssh rpi1

Isn't that easier!
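Since the four Host entries differ only in their number and the last octet of the address, they can also be generated rather than typed. The snippet below is a sketch that assumes the Pis were given consecutive static addresses, as in this article; print_ssh_hosts is a hypothetical helper name, and the defaults are just my example values.

```shell
# Sketch: print one Host block per Pi, assuming consecutive static IPs.
# print_ssh_hosts is a hypothetical helper; adjust the defaults to your network.
print_ssh_hosts() {
    prefix="${1:-192.168.1}"   # first three octets of the subnet
    first="${2:-101}"          # last octet assigned to rpi1
    count="${3:-4}"            # number of Pis in the cluster
    i=1
    while [ "$i" -le "$count" ]; do
        printf 'Host rpi%s\n' "$i"
        printf '    HostName %s.%s\n' "$prefix" "$((first + i - 1))"
        printf '    User rpi\n'
        printf '    IdentityFile ~/.ssh/rpi\n\n'
        i=$((i + 1))
    done
}
```

Running print_ssh_hosts >> ~/.ssh/config appends the four entries to an existing config file. It is worth reviewing the output on the terminal first, since appending twice would leave duplicate Host blocks.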

Configuring Ansible

Next we're going to set up an Ansible repository to programmatically manage the configuration of the cluster and help automate the installation of software across multiple machines. Using Ansible is likely a bit overkill, but it's a good exercise in getting familiar with what can be quite a useful tool for standardizing repetitive tasks and ensuring consistency and reproducibility when executing the same workload on multiple machines. It's a good tool to have in your developer tool belt.

To get started we need to do three things:

Install ansible

Define a hosts file that tells Ansible which machines to manage

Create a simple playbook

You can also look at the GitHub repo containing all of this already set up here.

To install Ansible, I assume you already have a Python environment set up. You can simply install the necessary packages with

pip install ansible ansible-lint

Next we need to create a hosts file that tells Ansible which machines to manage. This file is typically located at /etc/ansible/hosts per the Ansible Inventory documentation, but it can be located anywhere. I chose to put it in the root of my Ansible repository as hosts.yaml, so that the host configuration and the playbooks that use it are managed together and both version controlled. For this cluster the hosts file looks like

all:
  hosts:
    rpi1:
      ansible_host: rpi1 # 192.168.1.101
      ansible_user: rpi
      ansible_ssh_private_key_file: ~/.ssh/rpi
    rpi2:
      ansible_host: rpi2 # 192.168.1.102
      ansible_user: rpi
      ansible_ssh_private_key_file: ~/.ssh/rpi
    rpi3:
      ansible_host: rpi3 # 192.168.1.103
      ansible_user: rpi
      ansible_ssh_private_key_file: ~/.ssh/rpi
    rpi4:
      ansible_host: rpi4 # 192.168.1.104
      ansible_user: rpi
      ansible_ssh_private_key_file: ~/.ssh/rpi

The short-hand hostnames above assume that you have the SSH config file set up as described earlier. If you don't, you can replace each ansible_host value with the IP address of the Pi.
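Because the inventory lives in the repository rather than at /etc/ansible/hosts, Ansible has to be told where to find it, either with -i hosts.yaml on each command or through configuration. One option is a small ansible.cfg in the repository root, which Ansible reads when commands are run from that directory. This is a sketch of that setup; write_ansible_cfg is a hypothetical helper name, and the file could just as well be created by hand.

```shell
# Sketch: write a minimal ansible.cfg next to hosts.yaml so the inventory
# is used by default. write_ansible_cfg is a hypothetical helper name.
write_ansible_cfg() {
    repo_dir="${1:-.}"    # root of the Ansible repository
    cat > "$repo_dir/ansible.cfg" <<'EOF'
[defaults]
inventory = hosts.yaml
EOF
}
```

With this file in place, commands run from the repository root pick up hosts.yaml without the -i flag.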

We now have everything ready for a simple test to confirm Ansible is working: using it to ping all the hosts in the cluster. To do this we can run the command

ansible all -i hosts.yaml -m ping

which should return something like

rpi4 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

rpi3 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

rpi2 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

rpi1 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

With this in place we can now write our first playbook to update all the packages on the Pis. This playbook is a simple one that runs the equivalent of apt update and apt upgrade on every Pi in the cluster. To do this we create a file called update_packages.yaml in a new playbooks directory in the root of the Ansible repository. The contents of the file are

- name: Update all packages
  hosts: all # Target all machines
  become: true # Use privilege escalation (sudo)
  tasks:
    - name: Update apt package cache
      ansible.builtin.apt:
        update_cache: true
    - name: Upgrade all installed packages
      ansible.builtin.apt:
        upgrade: true

Now we can run this playbook with the command

ansible-playbook -i hosts.yaml playbooks/update_packages.yaml

This will update all the packages on all the Pis in the cluster. It may take a little while to run, so be patient.
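Before letting a playbook loose on every machine, it can be reassuring to validate it first. The wrapper below is a sketch that uses two standard ansible-playbook options, --syntax-check (parse only) and --check (dry run); preview_playbook itself is a hypothetical helper name, and the default inventory path is the one used in this article.

```shell
# Sketch: validate a playbook before applying it for real.
# preview_playbook is a hypothetical helper name.
preview_playbook() {
    playbook="$1"
    inventory="${2:-hosts.yaml}"
    # Parse the playbook without contacting any host; stop if it is malformed
    ansible-playbook -i "$inventory" "$playbook" --syntax-check || return 1
    # Dry run: report what would change without changing anything
    ansible-playbook -i "$inventory" "$playbook" --check
}
```

For example, preview_playbook playbooks/update_packages.yaml reports which hosts would have packages upgraded. Not every module supports check mode equally well, so treat the dry run as a hint rather than a guarantee.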

With this we have everything set up to write more complex playbooks to manage the cluster in the future.

Before moving on, there is one last useful command worth mentioning: the command to restart all the Pis in the cluster

ansible all -i hosts.yaml -a "/sbin/reboot" --become

Install Tailscale

As the final part of setting up the cluster, we're going to install Tailscale on all the nodes. Tailscale is a really cool product built on top of WireGuard that lets you easily and quickly create a private network between all your devices. This is particularly useful because it lets you access the cluster from outside your home network without setting up port forwarding on your router, which could expose your home network to external security threats.

For the purposes of this setup, we'll assume you've already created a Tailscale account. If you haven't, you can do so here.

Note that this setup cannot easily be done via Ansible, since the last step requires the user to follow a link from the remote session to authenticate the device. So we perform the installation manually on each Pi.

Following the installation instructions from the Tailscale website, we can install Tailscale on each Pi with the following commands

sudo mkdir -p --mode=0755 /usr/share/keyrings

curl -fsSL https://pkgs.tailscale.com/stable/ubuntu/jammy.noarmor.gpg | sudo tee /usr/share/keyrings/tailscale-archive-keyring.gpg >/dev/null

curl -fsSL https://pkgs.tailscale.com/stable/ubuntu/jammy.tailscale-keyring.list | sudo tee /etc/apt/sources.list.d/tailscale.list

sudo apt-get update && sudo apt-get install -y tailscale

Next, we start the Tailscale service. Note that we add the --ssh flag to enable Tailscale SSH access to the device.

sudo tailscale up --ssh

We can test that the installation was successful by sshing into the device using Tailscale SSH with

tailscale ssh rpi@rpi1

You can then use this command to access your Pis from anywhere in the world, which is pretty cool!

Pi-Hole Installation

Pi-hole is a DNS sinkhole that can protect devices on your home network from unwanted content, especially ads. While it is possible to run pi-hole more resiliently using Kubernetes and a containerized deployment, that complicates the networking and router configuration significantly. Instead, I simply installed pi-hole directly onto my rpi1 host using

curl -sSL https://install.pi-hole.net | bash

I then followed the post-install instructions to configure my router to use the Pi-hole as the DNS resolver for devices on my network.

k3s Installation

With all this in place, we're now ready to install Kubernetes on the cluster. For this we're going to use k3s, which is a lightweight Kubernetes distribution.

Control Node Setup

First, we're going to install k3s on the main node (rpi1) with

curl -sfL https://get.k3s.io | sh -s -

After the command exits we check that it's running with

➜ systemctl status k3s.service

● k3s.service - Lightweight Kubernetes

Loaded: loaded (/etc/systemd/system/k3s.service; enabled; vendor preset: enabled)

Active: active (running) since Sat 2024-04-27 23:04:36 PDT; 10s ago

Docs: https://k3s.io

Process: 2777 ExecStartPre=/bin/sh -xc ! /usr/bin/systemctl is-enabled --quiet nm-cloud-setup.service 2>/dev/null (code=exited, status=0/SUCCESS)

Process: 2779 ExecStartPre=/sbin/modprobe br_netfilter (code=exited, status=0/SUCCESS)

Process: 2780 ExecStartPre=/sbin/modprobe overlay (code=exited, status=0/SUCCESS)

Main PID: 2785 (k3s-server)

Tasks: 29

Memory: 519.7M

CPU: 48.613s

CGroup: /system.slice/k3s.service

├─2785 "/usr/local/bin/k3s server"

└─2805 "containerd"

We're now going to set up some configuration so that we can remotely administer the cluster, starting by copying the configuration files needed to access the cluster to your machine.

mkdir -p ~/.kube

Next, manually copy (via scp or ssh/less) the master node config at /etc/rancher/k3s/k3s.yaml to the local location ~/.kube/config. The file is owned by root on the node, so reading it there requires sudo.

We now have to modify the clusters[0].cluster.server value in ~/.kube/config from https://127.0.0.1:6443 to the IP address of our node. In this case it is https://192.168.1.101:6443
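The edit can be made by hand in an editor, or scripted. The following is a sketch of the scripted version, assuming the copied file still contains the default https://127.0.0.1:6443 server entry; set_kubeconfig_server is a hypothetical helper name.

```shell
# Sketch: point a copied kubeconfig at the control node instead of localhost.
# set_kubeconfig_server is a hypothetical helper name.
set_kubeconfig_server() {
    node_ip="$1"
    config="${2:-$HOME/.kube/config}"
    tmp_file="${config}.tmp"
    # Only the server line is rewritten; certificates and keys are left as they are
    sed "s#server: https://127\.0\.0\.1:6443#server: https://${node_ip}:6443#" "$config" > "$tmp_file" || return 1
    mv "$tmp_file" "$config"
    # The file contains cluster credentials, so keep it private
    chmod 600 "$config"
}
```

For this cluster the call would be set_kubeconfig_server 192.168.1.101. Writing to a temporary file and moving it into place avoids sed's in-place flag, which behaves differently on macOS and Linux.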

Next, we're going to install the Kubernetes CLI and Helm via

brew install kubectl helm

And we can confirm all this setup works by getting the node status with

➜ kubectl get node

NAME STATUS ROLES AGE VERSION

rpi1 Ready control-plane,master 8m26s v1.29.3+k3s1

Worker Node Setup

We are now ready to set up all of the worker nodes. First, on the main control node, get the master token with

sudo cat /var/lib/rancher/k3s/server/node-token

Then on each of the worker nodes we run the following command, replacing [MASTER_IP] with the rpi1 IP address and [K3S_TOKEN] with the above value

curl -sfL https://get.k3s.io | K3S_URL=https://[MASTER_IP]:6443 K3S_TOKEN="[K3S_TOKEN]" sh -

Next we can run kubectl get node again to confirm that all nodes installed and started successfully

➜ kubectl get node

NAME STATUS ROLES AGE VERSION

rpi1 Ready control-plane,master 17m v1.29.3+k3s1

rpi2 Ready <none> 10s v1.29.3+k3s1

rpi4 Ready <none> 2s v1.29.3+k3s1

rpi3 Ready <none> 1s v1.29.3+k3s1

And with this we're in business!
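Running the join command by hand on three workers is manageable, but it can also be scripted from the workstation. The sketch below assumes the SSH config aliases (rpi1 through rpi4) set up earlier and that the rpi user can run sudo on the Pis without a password prompt, which I have not verified for every image. Both k3s_agent_cmd and join_workers are hypothetical helper names.

```shell
# Sketch: build the agent install command once and run it on each worker over SSH.
# k3s_agent_cmd and join_workers are hypothetical helpers, not part of k3s.
k3s_agent_cmd() {
    master_ip="$1"
    token="$2"
    printf 'curl -sfL https://get.k3s.io | K3S_URL=https://%s:6443 K3S_TOKEN="%s" sh -' "$master_ip" "$token"
}

join_workers() {
    master_ip="$1"; shift
    # Read the join token from the control node (rpi1 in this article)
    token="$(ssh rpi1 sudo cat /var/lib/rancher/k3s/server/node-token)" || return 1
    for worker in "$@"; do
        echo "Joining $worker to the cluster" >&2
        ssh "$worker" "$(k3s_agent_cmd "$master_ip" "$token")"
    done
}
```

A call such as join_workers 192.168.1.101 rpi2 rpi3 rpi4 would then join all three workers in turn. Keeping the command builder separate makes it easy to print and inspect the exact command before running it anywhere.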

Additional Setup

We're going to do some additional setup. First we're going to label all our worker nodes so that they have roles we can refer to if needed. To do this we run

kubectl label nodes rpi2 kubernetes.io/role=worker

kubectl label nodes rpi3 kubernetes.io/role=worker

kubectl label nodes rpi4 kubernetes.io/role=worker

And we should now see

➜ kubectl get node

NAME STATUS ROLES AGE VERSION

rpi1 Ready control-plane,master 20m v1.29.3+k3s1

rpi2 Ready worker 3m40s v1.29.3+k3s1

rpi3 Ready worker 3m31s v1.29.3+k3s1

rpi4 Ready worker 3m32s v1.29.3+k3s1

Network Setup

The final bit of setup is to install MetalLB to provide network load balancer functionality, so that services deployed into the cluster can be provisioned with local IP addresses on the network. Without MetalLB, deployed services would not be reachable on the local (home) network, and services that request an IP address will stay in a pending state forever.

This can be observed in the out-of-the-box k3s distribution, which includes traefik for routing.

➜ kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 37m

kube-system kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 37m

kube-system metrics-server ClusterIP 10.43.87.78 <none> 443/TCP 37m

kube-system traefik LoadBalancer 10.43.51.12 <pending> 80:30234/TCP,443:32038/TCP 36m

Notice how the traefik EXTERNAL-IP is listed as <pending>? That means it has requested an IP address, but there is no provisioner that can provide one. To fix this we install MetalLB, following its Helm installation steps

helm repo add metallb https://metallb.github.io/metallb

then

helm install metallb metallb/metallb --create-namespace --namespace metallb-system

This command slightly modifies the default installation command by adding --create-namespace --namespace metallb-system. This installs MetalLB in a separate namespace, which makes managing the resources associated with it easier.
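MetalLB hands out addresses from a range that we configure in the next step, and that range should not overlap the static addresses already given to the Pis (or, ideally, the range your router uses for DHCP). A small helper for checking whether an address falls inside a candidate range could look like the sketch below; ip_to_int and ip_in_range are hypothetical names, and only IPv4 dotted-quad addresses are handled.

```shell
# Sketch: check whether an IPv4 address lies inside an inclusive range.
# ip_to_int and ip_in_range are hypothetical helpers.
ip_to_int() {
    old_ifs="$IFS"
    IFS=.
    set -- $1                 # split the dotted quad into four positional parameters
    IFS="$old_ifs"
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

ip_in_range() {
    ip="$(ip_to_int "$1")"
    [ "$ip" -ge "$(ip_to_int "$2")" ] && [ "$ip" -le "$(ip_to_int "$3")" ]
}
```

For example, ip_in_range 192.168.1.101 192.168.1.200 192.168.1.250 returns a non-zero status, confirming that rpi1's static address sits outside the pool used in this article.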

We now need to do some configuration, specifically telling the MetalLB installation which IP addresses it can hand out. To do this we create the configuration file metallb_address_pool.yaml containing

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: default-pool
  namespace: metallb-system
spec:
  addresses:
    - 192.168.1.200-192.168.1.250

We also make an L2 advertisement configuration file named metallb_l2_advertisement.yaml with

apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: default
  namespace: metallb-system
spec:
  ipAddressPools:
    - default-pool

We apply these configurations with

kubectl apply -f ./k8s/metallb_address_pool.yaml

then

kubectl apply -f ./k8s/metallb_l2_advertisement.yaml

Now when we check the status of the services we can see that traefik has been assigned an IP address out of the range we specified.

➜ kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 39m

kube-system kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 39m

kube-system metrics-server ClusterIP 10.43.87.78 <none> 443/TCP 39m

metallb-system metallb-webhook-service ClusterIP 10.43.117.244 <none> 443/TCP 76s

kube-system traefik LoadBalancer 10.43.51.12 192.168.1.200 80:30234/TCP,443:32038/TCP 38m

Links

As with all good projects, I learned heavily from the work and writings of others. The main resources I used in this work were:

https://www.tomshardware.com/how-to/boot-raspberry-pi-4-usb

https://www.jericdy.com/blog/installing-k3s-with-longhorn-and-usb-storage-on-raspberry-pi

https://rpi4cluster.com/

Along with some others that I came across:

https://chriskirby.net/highly-available-pi-hole-setup-in-kubernetes-with-secure-dns-over-https-doh/

The source code for scripts and other tools utilized in this blog or otherwise can be found on the associated project GitHub page.
